
The Siri debacle has reportedly taught Apple a valuable lesson about announcing features well in advance, before they are in a working state. “Apple, for the most part, will stop announcing features more than a few months before their official launch,” Mark Gurman and Drake Bennett reported for Bloomberg in a piece discussing the reasons behind Apple’s AI woes.

They were right: this time around, the first developer betas of Apple’s “26” operating systems included almost all of the announced features. Apple’s marketing materials also don’t flag any features with an asterisk, so there’s no official word on whether anything is coming later in 2025 or in 2026. However, if Gurman’s (usually reliable) reporting is anything to go by, three features planned for iOS 26 may not be ready this fall.

Gurman’s June 12 piece claims Apple plans to unleash an AI-infused version of Siri with personal context understanding and in-app actions as part of an iOS 26.4 update coming in March or April 2026. He added that Apple’s leadership team “has set an internal release target of spring 2026” for the belated Siri upgrade. The new features will let the assistant tap into personal data and on-device content, as well as perform complex tasks by chaining together a series of in-app actions.

“A further revamped voice assistant, dubbed LLM Siri internally, is still probably a year or two away — at minimum — from being introduced,” he cautioned. A spring timeframe was semi-confirmed by software engineering chief Craig Federighi and marketing boss Greg Joswiak, whom Apple dispatched on a post-WWDC25 media tour to salvage Apple’s reputation. In a series of interviews, the executives reluctantly confirmed that an AI upgrade for Siri is arriving in 2026 instead of 2025.

Gurman reported ahead of WWDC25 that a redesigned Calendar app was coming to the iPhone, iPad, Mac, and Apple Watch, but he offered no further details. An Apple job posting later revealed that the company is seeking an engineer to “reimagine what a modern calendar can be across Apple’s platforms,” confirming the app is undergoing a major redesign.

Gurman’s pre-WWDC25 roundup states that the AI-enhanced Calendar app may not be ready until iOS 27. “It originally planned to introduce the software this year, but it’s been delayed and is now slated for the subsequent set of operating systems,” he said, adding that iOS 27 and macOS 27 are already in development.

Aside from the Liquid Glass design overhaul, there are no new features in the Calendar app on iOS 26, iPadOS 26, and macOS Tahoe 26. In fact, the Calendar app hasn’t received any major improvements in quite a few years. The only notable exception is the ability to create, view, edit, and complete reminders directly in Calendar, which arrived with iOS 18, iPadOS 18, and macOS Sequoia.

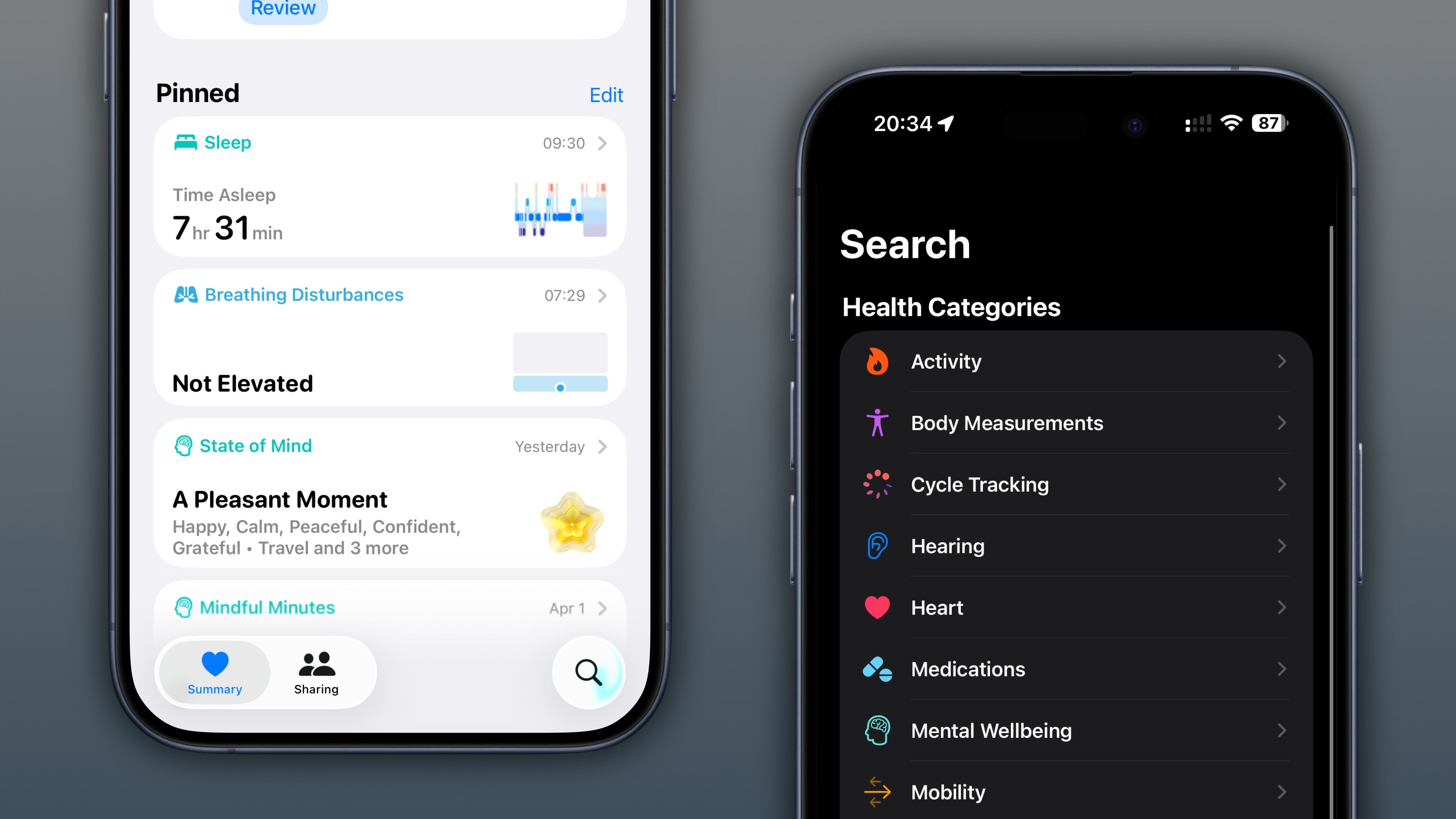

Gurman reported in March that Apple planned to overhaul the built-in Health app and add an AI-powered doctor, adding that Apple could bring “smaller changes” to the app this year. However, the Health app in iOS 26 gains nothing new beyond the Liquid Glass design, which suggests the major revamp has been pushed back.

“Apple has also been working on an end-to-end revamp of its Health app tied to an AI doctor-based service code-named Mulberry,” he reported on the eve of the June 9 WWDC25 keynote. “Neither will be shown at WWDC and, due to delays, likely won’t be released at full scale until the end of next year at the earliest, as part of Buttercup.”

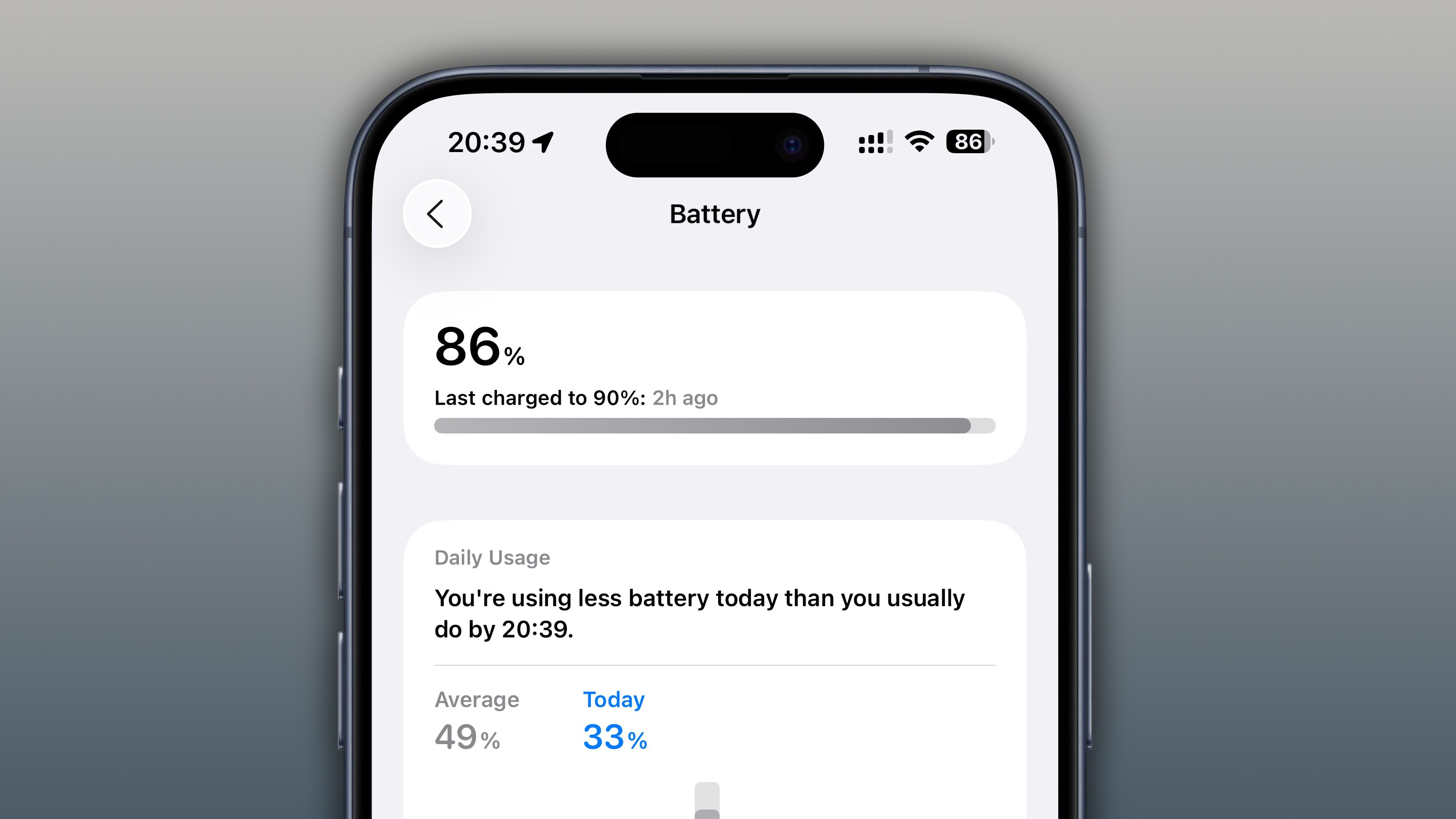

AI-Powered Battery Optimization

Gurman said earlier that iOS 26 would use Apple Intelligence to optimize battery performance on iPhones, but suggested the feature may not become publicly available until the rumored ultra-thin iPhone 17 model launches later this year. Because of its thin chassis, that device is expected to have a smaller battery, and AI could help extend its run time.

His May report said the feature analyzes user behavior to “make adjustments” that conserve energy. It will also display the time remaining until a full charge on the Lock Screen. Apple didn’t mention these features, but one of its press images shows this charging estimate on the iPhone’s Lock Screen, so it’s probably coming in an iOS 26 update later this year.

However, the 3D effect on the Lock Screen won’t work on the iPhone 11 family or on the second-generation iPhone SE and newer, because it requires at least the iPhone 12.

Vision Pro Unlocking: Face ID Required

The visionOS 26 operating system lets Vision Pro owners automatically unlock their iPhone (iOS 26 required) while wearing the headset. This only works on Face ID-capable iPhones. Sorry, iPhone SE fans!

Features Requiring at Least an iPhone 15 Pro

iOS 26 also brings more than half a dozen features that rely on Apple Intelligence, machine learning, and large language models running on-device. As with the Apple Intelligence features introduced over the past year, these capabilities require the processing power of an A17 Pro chip, an M1 chip, or newer. In other words, the following features in iOS 26 and iPadOS 26 require at least the iPhone 15 Pro or iPhone 15 Pro Max (2023), seventh-generation iPad mini (2024), M1 iPad Air (2022), or M1 iPad Pro (2021).

Visual Intelligence on Screenshots

Visual Intelligence has gained onscreen awareness, meaning it can analyze anything you see on the iPhone’s screen. All you need to do is take a screenshot by pressing the volume up button and the side button at the same time, then exit the Markup interface by tapping the pen icon at the top to reveal the Visual Intelligence options.

Visual Intelligence may also highlight any objects you can tap. Or, you can draw a selection around anything on the screenshot, similar to Android’s Circle to Search feature.

iOS 26 bolsters Visual Intelligence with instant identification of new types of objects, including art, books, landmarks, natural landmarks, and sculptures, not just animals and plants like before. Visual Intelligence produces relevant results via Google image search, but Apple said it’ll also be able to search using Etsy and other supported apps.

You can also upload the screenshot to ChatGPT and ask the chatbot to describe it. And if Visual Intelligence finds event information in the screenshot you took, you’ll see an option to add a new calendar entry.

Apple has upgraded translation across its platforms, bringing live translation to the built-in Phone, Messages, and FaceTime apps on the iPhone, iPad, Mac, and Apple Watch.

Live translation uses Apple Intelligence; your device must meet the hardware requirements for Apple Intelligence to hear live translation spoken aloud on phone calls, see automatic translation in the Messages app as you type, and view real-time translation of foreign speakers on FaceTime calls.

AI Backgrounds and Poll Suggestions in Messages

On iOS 26 and iPadOS 26, the built-in Messages app at long last brings chat backgrounds and polls. You can pick a different wallpaper for each chat by selecting the “Backgrounds” tab on the chat info screen, then create a color gradient background or use a built-in background or your own image from the Photos library. These features don’t require any special hardware and are available to all iOS 26 users.

But iOS 26’s Messages app also lets you create a new AI chat wallpaper from scratch in Image Playground, which requires Apple Intelligence. In other words, creating AI chat backgrounds with Image Playground and getting poll suggestions in group chats only work on the iPhone 15 Pro or newer and on iPads with an A17 Pro or M-series chip.

Mixing Emoji Together With Genmoji

Genmoji, which lets you create custom emoji images based on people’s photos, short textual descriptions, and other parameters, now lets you mix two emoji together and fine-tune them further with descriptions on iOS 26 and iPadOS 26. Plus, you can customize your generated character by changing expressions or adjusting personal attributes such as hairstyle. These new capabilities are only available on devices running Apple Intelligence.

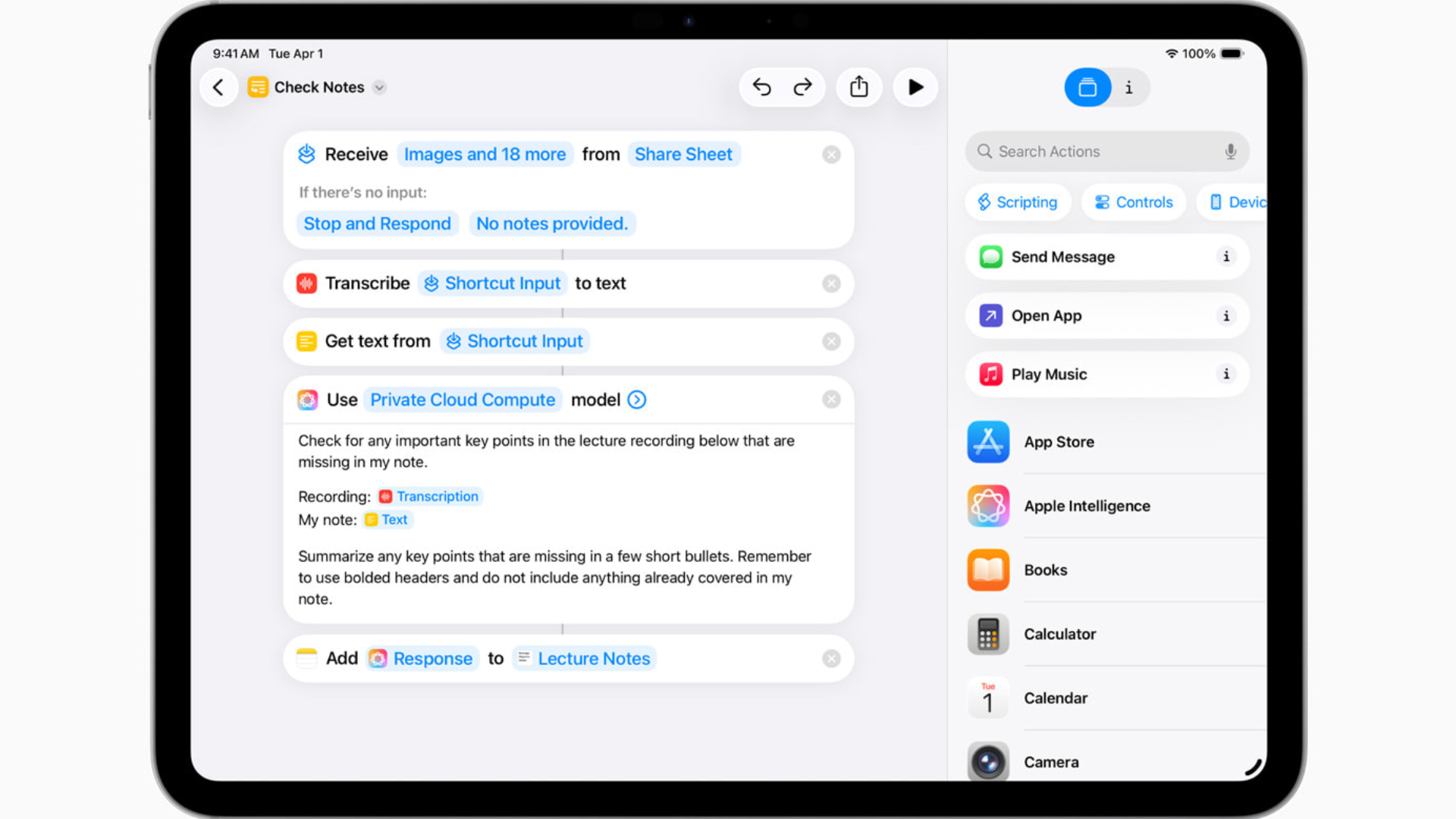

iOS 26 brings a new set of AI-powered actions in the built-in Shortcuts app, as well as dedicated actions for the Apple Intelligence features, such as summarizing text with Writing Tools or creating images with Image Playground. On top of that, you can use responses from ChatGPT or Apple Intelligence as input in your own automated workflows.

As an example, Apple said a student could build a shortcut that uses the Apple Intelligence model to compare an audio transcription of a class lecture with their notes, automatically adding any key points or takeaways they missed. All AI features in the Shortcuts app are only available on devices capable of running Apple Intelligence.

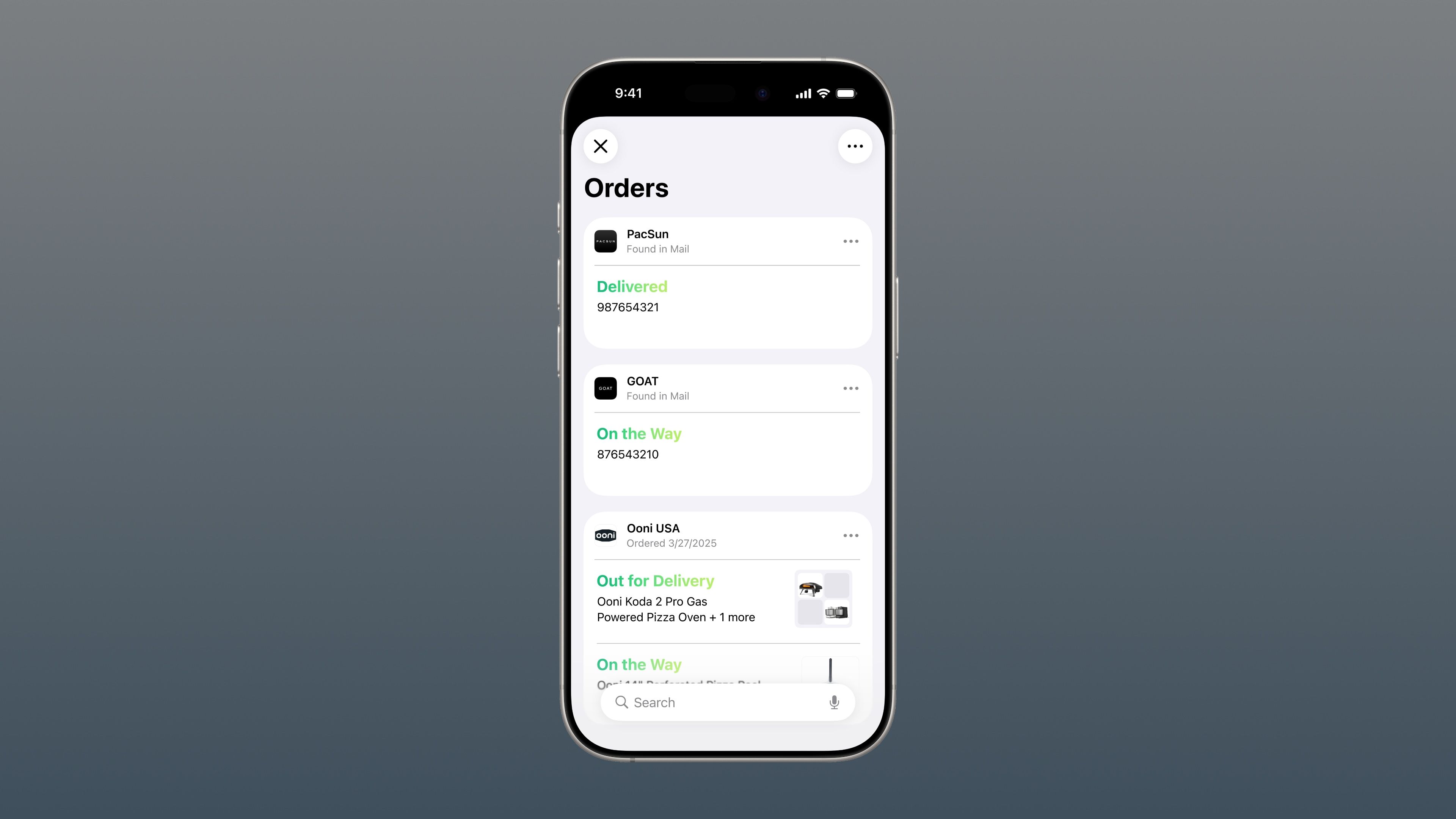

Order Tracking in the Wallet App

Reminder Suggestions and Automatic Categorization

Apple Intelligence on iOS 26 and iPadOS 26 powers suggestions for new tasks and grocery items in the built-in Reminders app. To make those suggestions, Apple Intelligence identifies relevant items in emails you receive, websites you visit, notes you write, text found in Messages, and more. Based on those signals, the feature can also automatically categorize task lists into organized sections in the Reminders app, if your device supports Apple Intelligence.

Features With Limited Language Support

Before we dive into those, remember that you may be able to use features unavailable in your language or country simply by making adjustments in Settings > General > Language and Region. I’ve long used this trick to reveal the News app, which isn’t available where I live.

To use Apple Intelligence in one of the currently supported languages (English, French, German, Italian, Portuguese (Brazil), Spanish, Japanese, Korean, or Chinese (Simplified)), be sure to set both your device language and your Siri language accordingly. Apple Intelligence will pick up support for more languages, including Vietnamese, over the course of the year.

Live Translation in Phone, Messages, and FaceTime

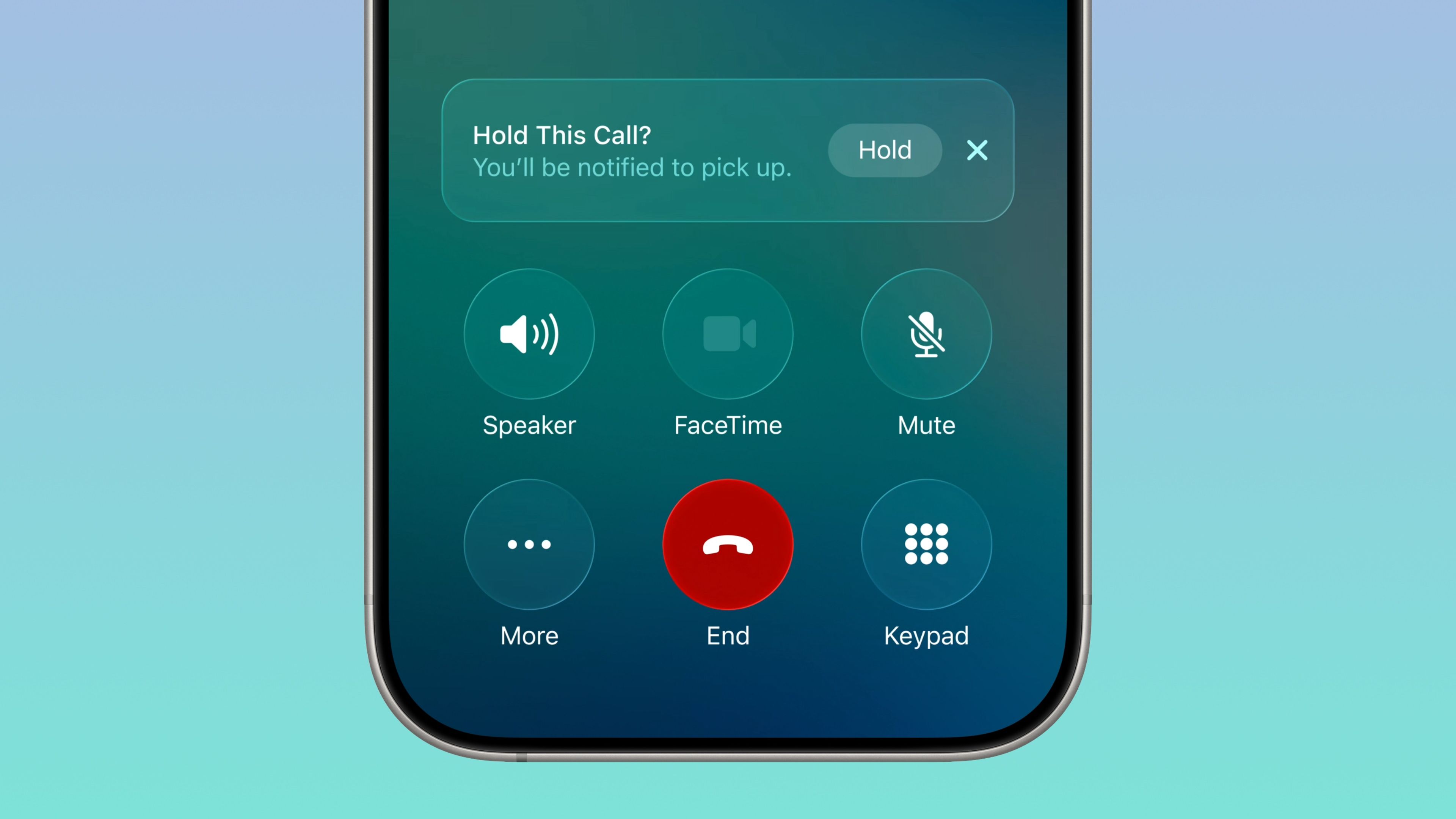

Call Screening

iOS 26, iPadOS 26, watchOS 26, and macOS Tahoe 26 bring screening tools to the Phone, FaceTime, and Messages apps on the iPhone, iPad, Apple Watch, and Mac to help eliminate distractions. Call Screening springs into action when you receive a call from an unknown number in the Phone app, asking the caller for their name and the reason for the call. You can see this information on the calling screen as it’s gathered, which helps you decide whether it’s an important call you should answer or something you can safely ignore.

However, call screening only works for calls in these languages and regions: Cantonese (China mainland, Hong Kong, Macao), English (US, UK, Australia, Canada, India, Ireland, New Zealand, Puerto Rico, Singapore, South Africa), French (Canada, France), German (Germany), Japanese (Japan), Korean (Korea), Mandarin Chinese (China mainland, Taiwan, Macao), Portuguese (Brazil), and Spanish (US, Mexico, Puerto Rico, Spain).
