Situation

Solution

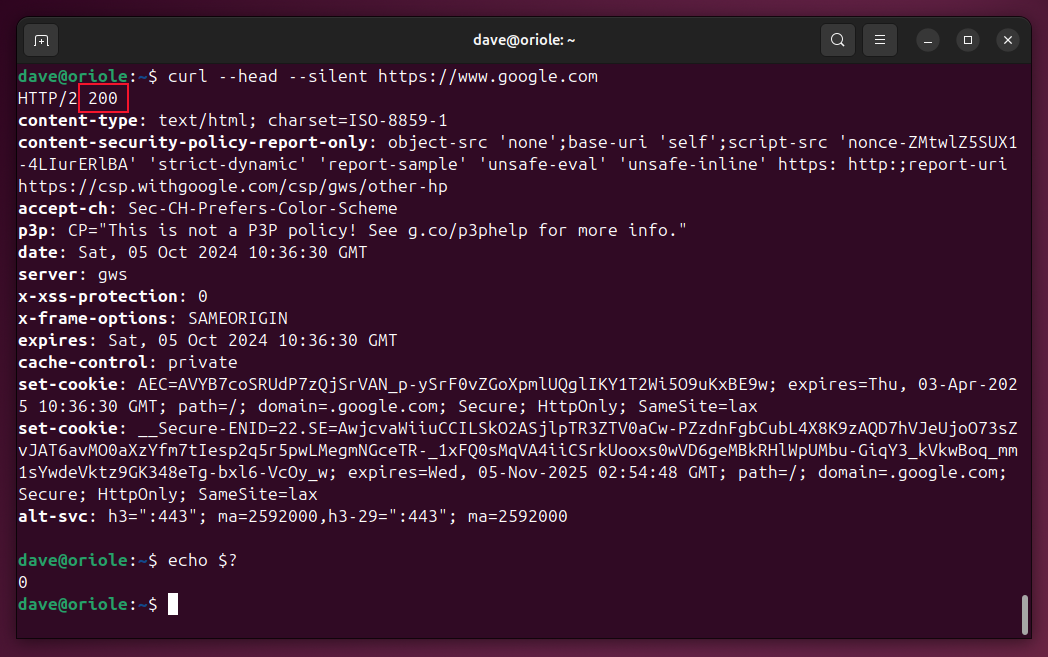

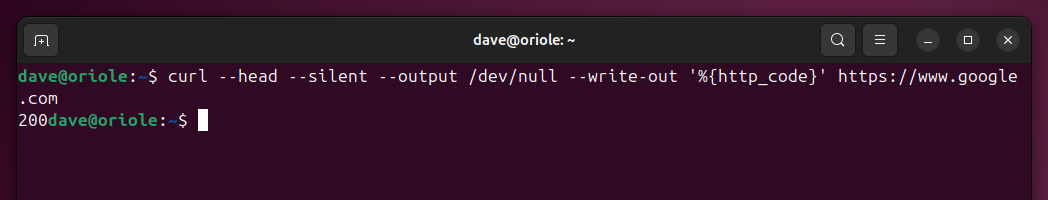

Using a web address or uniform resource locator (URL) in a script involves a leap of faith. There are all sorts of reasons why trying to use the URL might fail.

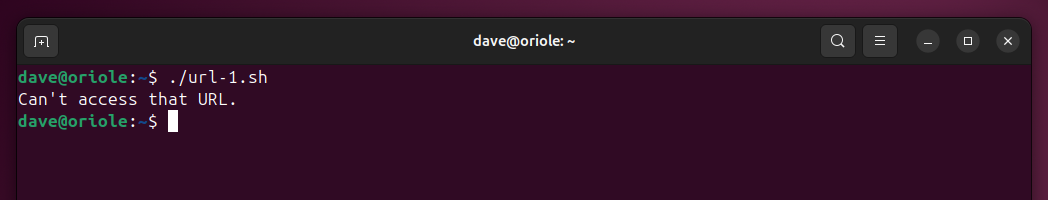

The URL could contain typos, especially if it is passed as a parameter to the script. The URL might be out of date. The online resource it points to might be temporarily offline, or it might have been removed permanently.

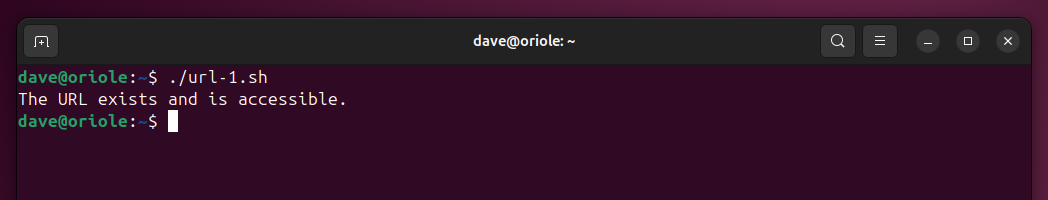

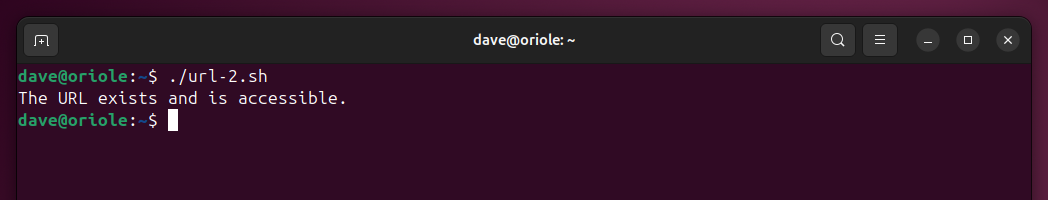

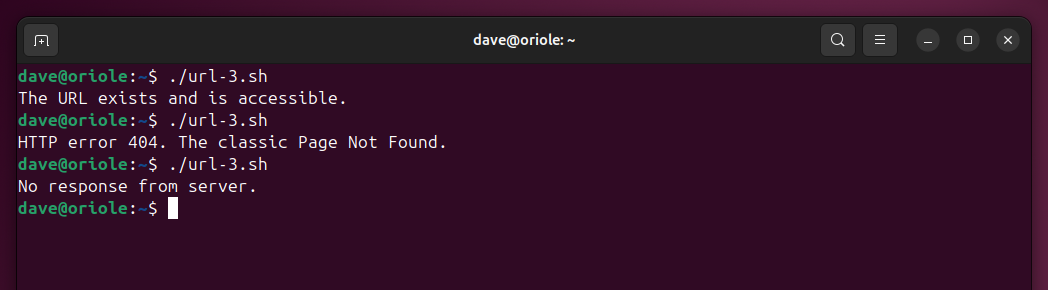

Checking whether the URL exists and responds properly before attempting to use it is good working practice. Here are some methods you can use to verify it’s safe to proceed with a URL, and gracefully handle error conditions.

Using wget to Verify a URL

The wget command is used to download files, but we can suppress the download action using its --spider option. When we do this, wget gives us useful information about whether the URL is accessible and responding.

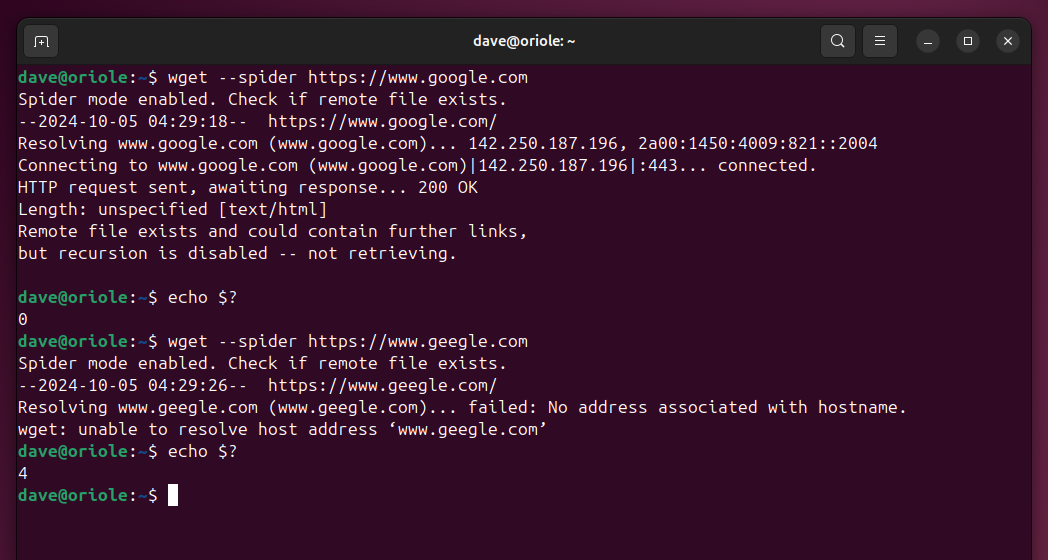

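We’ll try to see whether google.com is up and active, then we’ll try a fictional URL of geegle.com. We’ll check the return code in each case with echo $?, which prints the exit status of the last command. A value of 0 means success, and anything else means wget reported a problem.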

wget --spider https://www.google.com

echo $?

wget --spider https://www.geegle.com

echo $?
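The same check can be wrapped in a function so that a script can decide whether to proceed. The following is a minimal sketch, not a definitive implementation: the function names url_is_reachable and check_url are hypothetical, and the 10-second timeout and single attempt are assumptions chosen so that a dead URL fails quickly.

```shell
#!/usr/bin/env bash

# Hypothetical helper: returns wget's exit status for the URL.
# --spider    check the URL without downloading anything
# -q          suppress wget's normal output
# --timeout   give up after 10 seconds (assumed value)
# --tries     make one attempt only (assumed value)
url_is_reachable() {
    local url="$1"
    wget --spider -q --timeout=10 --tries=1 "$url"
}

# Hypothetical helper: reports the result and passes wget's exit
# status back to the caller so the script can branch on it.
check_url() {
    local url="$1"
    local status

    url_is_reachable "$url"
    status=$?

    if [ "$status" -eq 0 ]; then
        echo "OK: $url"
    else
        # wget documents 4 as a network failure and 8 as an
        # error response from the server, among other codes.
        echo "FAILED (wget exit code $status): $url" >&2
    fi

    return "$status"
}
```

A script could then call check_url with the URL it was given, for example the first script parameter, and only carry on with the download or processing step if the function returns 0.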
