Situation

Solution

GPT-4o vs. GPT-4 Turbo and GPT-3.5

We’ve covered the difference between GPT-3.5 and GPT-4 Turbo in detail already, but the short version is that GPT-4 is significantly smarter than GPT-3.5. It can understand much more nuance, produce more accurate results, and is significantly less prone to AI hallucinations. GPT-3.5 is still very much relevant since it’s fast, available for free, and can still handle most everyday tasks with ease, as long as you’re aware that it’s much more likely to give you incorrect information.

GPT-4 Turbo had been the flagship model until the arrival of GPT-4o. It was only available to ChatGPT Plus subscribers and came with all the fun toys OpenAI developed, such as custom GPTs and live web access.

Before we get into the actual features and capabilities, an important fact is that (according to OpenAI) GPT-4o is half the cost and twice the speed of GPT-4 Turbo. This is likely why GPT-4o is available to both free and paid users, although paying users will get five times the usage limit for GPT-4o.

GPT-4o is broadly just as smart as GPT-4 Turbo, so overall this advancement is more about efficiency. However, there are some obvious and not-so-obvious advancements that go beyond simply being faster and cheaper.

What Can GPT-4o Do?

The big buzzword with GPT-4o is “multimodal”, which is to say that GPT-4o can operate on audio, images, video, and text. Well, so could GPT-4 Turbo, but this time it’s different under the hood.

OpenAI says that it has trained a single neural net on all modalities at once. With the older GPT-4 Turbo, voice mode chained three steps together: one model converted your spoken words to text, GPT-4 interpreted and responded to that text, and the response was then converted back into a synthesized AI voice.
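To make the older approach concrete, here is a minimal Python sketch of that kind of chained voice pipeline. Every function in it (`transcribe`, `generate_reply`, `synthesize`, `cascaded_voice_mode`) is a hypothetical stand-in invented for illustration, not a real OpenAI API, and the "audio" is just text bytes so the example can run on its own.

```python
# Illustrative sketch only: all functions are hypothetical stand-ins,
# not real OpenAI APIs. "Audio" is faked as UTF-8 bytes so this runs as-is.

def transcribe(audio: bytes) -> str:
    """Stage 1: speech-to-text. Only the words survive this step."""
    return audio.decode("utf-8")


def generate_reply(text: str) -> str:
    """Stage 2: the text-only language model sees nothing but the transcript."""
    return f"You said: {text}"


def synthesize(text: str) -> bytes:
    """Stage 3: text-to-speech turns the written reply back into audio."""
    return text.encode("utf-8")


def cascaded_voice_mode(audio: bytes) -> bytes:
    """Run the three stages one after another, as in the older voice mode."""
    transcript = transcribe(audio)
    reply = generate_reply(transcript)
    return synthesize(reply)


if __name__ == "__main__":
    print(cascaded_voice_mode(b"hello"))
```

Each stage has to finish before the next one starts, and the middle stage only ever receives plain text. That is the design a single end-to-end model is meant to replace.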

With GPT-4o it’s all one model, which has numerous knock-on effects on performance and abilities. OpenAI claims that the response time when conversing with GPT-4o is now only a few hundred milliseconds, about the same as a real-time conversation with another human. Compare this to the 3-5 seconds it took for older models to respond, and it’s a major leap.

Apart from being much snappier, GPT-4o can also interpret nonverbal elements of speech, such as the tone of your voice, and its own responses now have an emotional range. It can even sing! In other words, OpenAI has given GPT-4o some measure of affective computing ability.

The same sort of efficiency and unification applies to text and images, as well as video. In one demo, GPT-4o is shown having a real-time conversation with a human being over live video and audio. Just like in a video chat with a human, it appears that GPT-4o can interpret what it sees through the camera and make some pretty incisive inferences. GPT-4o can also keep a substantially larger number of tokens in mind compared to previous models, which means it can apply its intelligence to much longer conversations and larger amounts of data. This will likely make it more useful for tasks like helping you write a novel, as one example.

Now, as of this writing, not all of these features are available to the public yet, but OpenAI has stated that it will roll them out in the weeks following the initial announcement and release of the core model.

How Much Does GPT-4o Cost?

GPT-4o is available to both free and paid users, although paying users will get five times the usage limit for GPT-4o. As of this writing, the cost of ChatGPT Plus is still $20 a month. If you’re a developer, you’ll have to look up the API costs for your needs, but GPT-4o should be much cheaper compared to other models.
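For developers trying to gauge what "half the cost" might mean in practice, here is a rough Python sketch of a token-based cost estimate. The function name `estimate_cost` and the per-million-token prices are placeholders assumed for illustration, not current OpenAI pricing, so check the official pricing page before relying on any numbers.

```python
# Rough sketch: estimate an API bill from token counts.
# The prices below are PLACEHOLDERS for illustration, not official pricing.

def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Return the estimated cost in dollars for one workload."""
    total = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    )
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 200k input tokens, 50k output tokens.
    older = estimate_cost(200_000, 50_000, 10.0, 30.0)  # assumed older-model prices
    newer = estimate_cost(200_000, 50_000, 5.0, 15.0)   # assumed half-price model
    print(f"older model: ${older:.2f}, half-price model: ${newer:.2f}")
```

Because the estimate is linear in the prices, halving both rates halves the bill for the same workload, whatever the real numbers turn out to be.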

How to Use GPT-4o

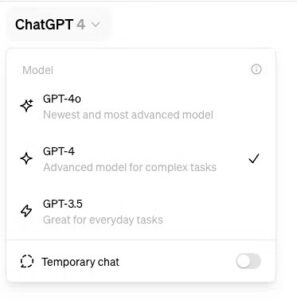

As I mentioned above, GPT-4o is available to both free and paid users, but not all of its features are online right away. So, depending on when you read this, the exact things you can do with it will vary. That said, using GPT-4o is dead simple. If you’re using the ChatGPT website as a ChatGPT Plus subscriber, just click the drop-down menu that displays the current model name and select GPT-4o.

If you are a free user, you will default to GPT-4o until you run out of your allocation, at which point you’ll be dropped back to GPT-3.5 until you have more requests available.

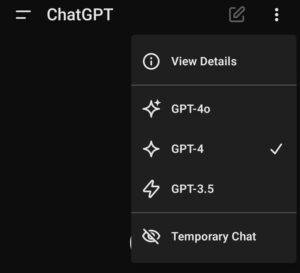

If you’re using the mobile app, simply tap the three dots and choose the model from there.
